This has been a very exciting week with the Kronum project (kronum.com). Kronum is a new entry to the American sports scene. It's a mashup of several different sports (basketball, soccer, and others) played on a circular field outdoors. Over the past two weeks, we've seen our web traffic go from ~200 visitors per day to 13,000+. What happened to increase our traffic so dramatically and so quickly? The beauty of the viral web.

About 10 days ago, actor Rob Lowe tweeted about us, and that's where it seems to have started. From there, Kronum ended up featured on Wired, then on ESPN SportsNation's Facebook page, and will soon be featured on the SportsNation TV show as well as in ESPN The Magazine. While the Lowe tweet got the ball rolling, the Wired article really upped the ante. We broke 30,000 visitors in the 24 hours after that story was launched.

All in all, this initial load was handled fairly well, especially given the nature of the site (more on that below). There were two server crashes during this traffic spike: one was the result of a corrupted CouchDB cached document and the other was Apache HTTPD failing because of too many concurrent requests. Both were easy enough to resolve.

However, the rapid spike in traffic and the consistent trending of Kronum across the intrawebs and other traditional media led the Kronum team to reevaluate their infrastructure. The team realizes that they are on the cusp of things really taking off and that what happened in the past two weeks was nothing compared to what is (hopefully) coming.

The Kronum League is about to begin its first recreation league session in Philadelphia, where the sport is headquartered, and its third official professional season starts later this summer. The start of these seasons, coupled with the growing media and web attention, should provide an even greater spike in traffic over the coming months.

My part in all this is really small. I am the (only) guy responsible for three large areas of the application: back-end code, the database, and the servers. Okay, not really a 'small' part, huh?

Here's where we are today.

The Web and DB Server Specs:

- Railo 3.2 (with BlazeDS)

- Apache Tomcat 7 (64-bit)

- Apache HTTPD 2.2.15 (32-bit)

- MS SQL Server 2008 Web edition

- Apache CouchDB 1.0.2 (for cache)

- Apache ActiveMQ 5.4.1 (for messaging)

The Code/Application:

- Back-end written in CFML with no framework

- Java used in specific spots for handling ActiveMQ messaging and connections

- CFTracker 2.1 to monitor memory and sessions on our application

- Front-end is a 100% Flex/Flash application

Server I:

- Win2K3 R2 64-bit (SP 2 installed)

- 8 GB RAM

- Intel Xeon Quad 2.33 GHz Processor

- ~400GB of disk space

- Primary role is that of the web server (Apache HTTPD, Apache Tomcat, and Railo)

- Secondary role is that of a DB server for our staging site

Server II (this server was added about a year after Server I):

- Win2K3 R2 64-bit

- 8 GB of RAM

- Intel Xeon 8 core 2.4 GHz Processor

- ~400GB of disk space

- Primary role is that of a DB server (SQL Server 2008)

- Secondary role is that of a web server for our staging site

As you can see, the servers are pretty beefy, which might raise the question, "Why move, and why move to AWS in particular?" The motivation is twofold: (1) a move to AWS will reduce the existing server costs by ~35% and (2) the application needs to be nimble for these spikes in traffic. It is this second motivation that is truly driving the change (though the fact that we could now run 2 web servers and 1 dedicated DB server for the same price as the current hosting cost doesn't hurt).

Disclaimer: I will not name our current web host because our transition has nothing to do with them. They have been fantastic to work with and do a great job of responding to requests, etc. The move is entirely to save some money and, as I said above, to be more nimble in times of heavy load.

The Application's Problems:

- The front-end of the site is built entirely in Flex/Flash and is VERY graphic intensive (it is not just large graphics ... it sends 100s of graphics to the client).

- The front-end is made up of 4.5 MB of Flash SWFs (the main SWF and accompanying modules), not counting the aforementioned images/graphics.

- Caching is barely used at all on the front-end (e.g., the homepage alone sends 6.3 MB of graphics to the client, of which only 847.7 KB worth are grabbed from cache).

- The back-end, which I inherited and, unfortunately, had to follow, is a horrible mess of objects. There are remote services for Flex connections, but these remote services then call internal services that then do all the work (and those services then call various DAOs, other services and VOs/Beans). Given that one of CFML's weaknesses is the speed at which objects are created, we create a boatload of objects unnecessarily with each request.

- There are many expensive database calls and there is not a really great way around them (more SQL tuning, sure, but it's one of those "they are what we thought they would be" things ... ass kickers :)

- There is minimal caching on the back-end (not for my lack of wanting to implement it).

- We serve all our images from the local web server (videos are streamed from a CDN).
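To make the back-end caching point concrete, here is a minimal sketch of the kind of time-based cache I have in mind for the expensive database calls. It is written in Python purely for illustration (the application itself is CFML), and `TTLCache`, `get_or_load`, and `load_standings` are hypothetical names, not code from the site.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after a fixed number of seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[], Any]) -> Any:
        """Return the cached value, calling loader() only on a miss or after expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]
        value = loader()
        self._entries[key] = (now + self.ttl_seconds, value)
        return value


def load_standings() -> list:
    """Hypothetical stand-in for an expensive database query."""
    load_standings.calls += 1
    return ["team-a", "team-b"]


load_standings.calls = 0

if __name__ == "__main__":
    cache = TTLCache(ttl_seconds=60)
    cache.get_or_load("standings", load_standings)
    cache.get_or_load("standings", load_standings)
    # The loader ran once; the second call was served from the cache.
    print(load_standings.calls)
```

The trade-off is staleness: a 60-second TTL means a query result can be up to a minute old, which is usually fine for data that changes rarely but is requested on every page load.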

The Server's Problems:

- They are pretty expensive (not to say that they are overpriced).

- It takes a good bit to get another server set up, configured and running (1-2 business days).

- We are locked into SQL Server 2008 Web edition, which lacks replication, unless we want to pay ~$17,000 for the Enterprise license on our two DB server installs.

- We prefer Apache servers, and they simply do not run as well on Windows as they do on Linux.

- The Win2K3 OS takes away precious server resources not as readily lost in a Linux server.

- Security is tougher on Windows than Linux (note this is a comparative statement and not a statement that there are no security issues on Linux) and while this is handled admirably by our current host, it's still a point of concern, albeit a minor one.

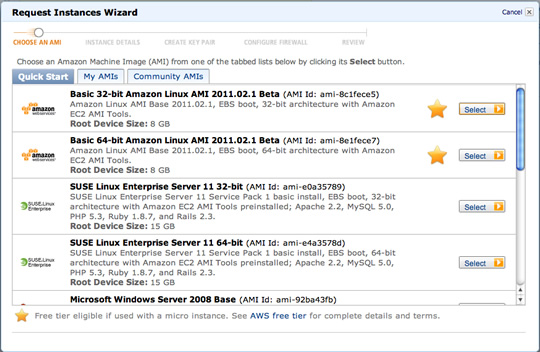

We are now in the process of migrating to Amazon Web Services (AWS). We have set up our initial machine image (AMI) with the following:

- Large Instance (64-bit, 7.5 GB RAM, 850 GB disk, 2 virtual cores with 2 EC2 Compute Units each, and high I/O performance)

- Ubuntu 10.10 Server 64-bit

- Sun/Oracle's JDK 1.6.0_24 64-bit

- Apache HTTPD 2.2.x (I forget the exact rev) 64-bit

- Apache Tomcat 7.0.12 64-bit

- Apache Tomcat Native Connectors 64-bit

- Apache CouchDB 1.0.1 64-bit

- Apache ActiveMQ 5.5 64-bit

- Railo 3.3 (w/ BlazeDS, of course)

- vsftpd

- OpenSSL

- The code base

- EBS volume tied to our AMI

Next up, we'll be making the following changes and migrations:

- Migrate the SQL Server 2008 DB to MySQL 5.1.x running on Amazon's RDS, which supplies replication and backups as part of the service as well as failover protection! In the short run, we will continue to use our SQL Server DB from our existing server with the new site server.

- Migrate sending mail from the application to Amazon SES.

- Begin to serve our images and other static content from S3 (it's already been set up on S3).

- Use Amazon's CloudWatch monitoring together with Auto Scaling to scale the site as needed.

- Set up the Elastic Load Balancer.
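As a rough illustration of the S3 item above, moving static content off the web server mostly comes down to rewriting asset paths into bucket URLs. The sketch below is Python rather than our CFML, and the bucket name `example-static-assets` and the function `static_asset_url` are made up for the example; it assumes the standard virtual-hosted-style S3 URL and that keys in the bucket mirror the site-relative paths.

```python
from urllib.parse import quote

# Hypothetical bucket name, used only for illustration.
ASSET_BUCKET = "example-static-assets"


def static_asset_url(local_path: str, bucket: str = ASSET_BUCKET) -> str:
    """Map a site-relative asset path to a virtual-hosted-style S3 URL."""
    key = local_path.lstrip("/")
    if not key:
        raise ValueError("asset path must not be empty")
    # quote() percent-encodes spaces and other unsafe characters but leaves "/" intact.
    return f"https://{bucket}.s3.amazonaws.com/{quote(key)}"


if __name__ == "__main__":
    # Prints https://example-static-assets.s3.amazonaws.com/images/teams/logo%20large.png
    print(static_asset_url("/images/teams/logo large.png"))
```

Keeping the mapping in one function means the asset host can be swapped later (for a CDN in front of the bucket, say) without touching every place an image URL is built.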

Over the next couple of weeks, I'm going to be doing some posts in the following categories:

- Getting started on AWS for CFML applications (i.e., building your server, installing software, configuring the server for security and performance, getting ActiveMQ and Tomcat to talk)

- Migrating from MS SQL to MySQL on RDS

- Load testing your site

- Scaling on the cloud

- Kick-ass OSS CFML applications to help you run a better site (CFTracker and Hoth in particular)

- Refactoring your CFML code (i.e., transition to S3 for serving static content, cache the shit out of your app, get rid of overly complex OOP in favor of lighter and nimbler OOP, integrating ORM into an existing, non-ORM application, tuning DB queries when ORM is not enough)

This migration is a pretty huge and really cool project, one that I am very excited to be a part of. If there is something else involved in making such a transition, please let me know and I can add it to my list of forthcoming posts. If you have any other questions on what we're doing and why, please don't hesitate to send me a note or drop a comment.